Privitera-Johnson General Exam

Kristin Privitera-Johnson

3/16/23

Producing scientific advice in a changing world

A working title

Motivation

Scientific Motivation

How do you know when you’re successful? From a single-species point of view, you may want to know things like:

- Reference points:

  - What is B and/or F?

  - How does B and/or F compare to historical B and/or F?

- Population dynamics:

  - How do B and F influence average net production rates?

  - How do components of net production vary over time?

Broad research questions pt. 1

Broad research questions pt. 2

“All models are wrong but some are useful”

- How can analysts leverage this principle to evaluate management strategies?

- How can modeling efforts influence management outcomes?

- How have some of these modeling efforts led to surprising management outcomes?

- What did analysts learn from these surprises, and how did they apply those lessons to future modeling?

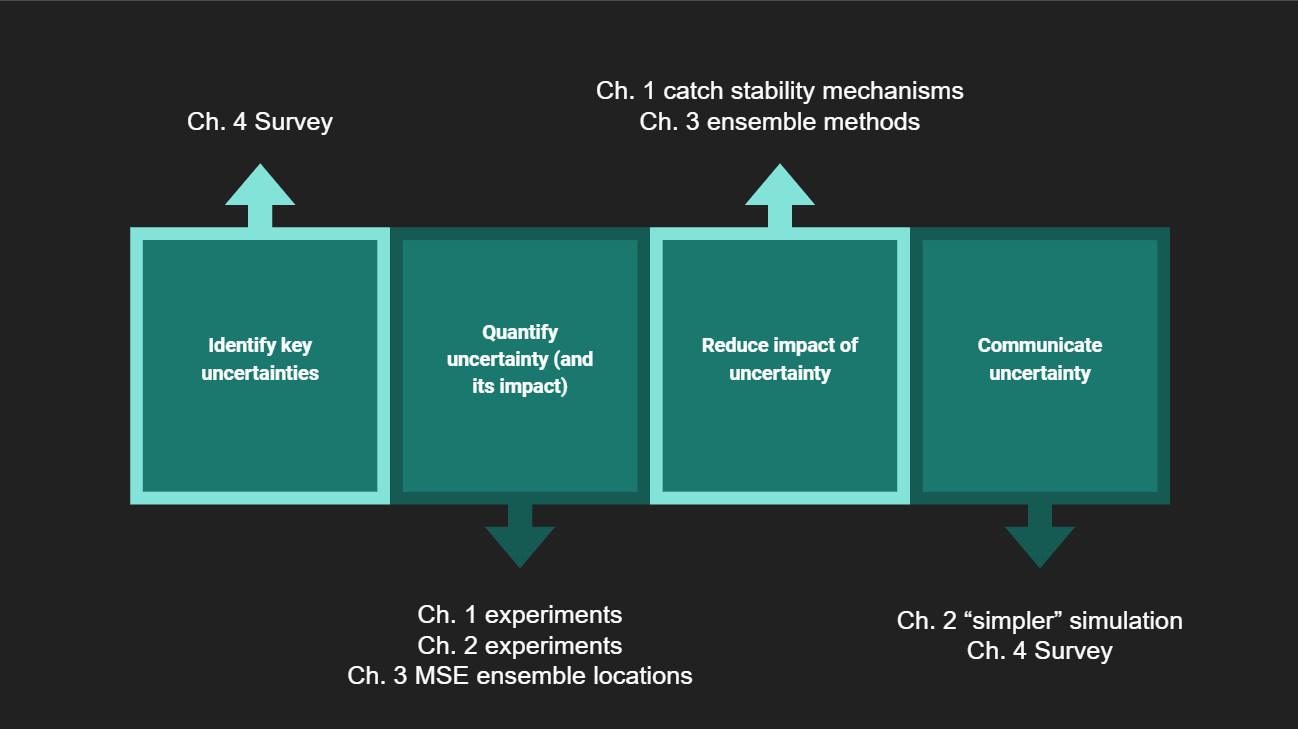

Chapter Themes

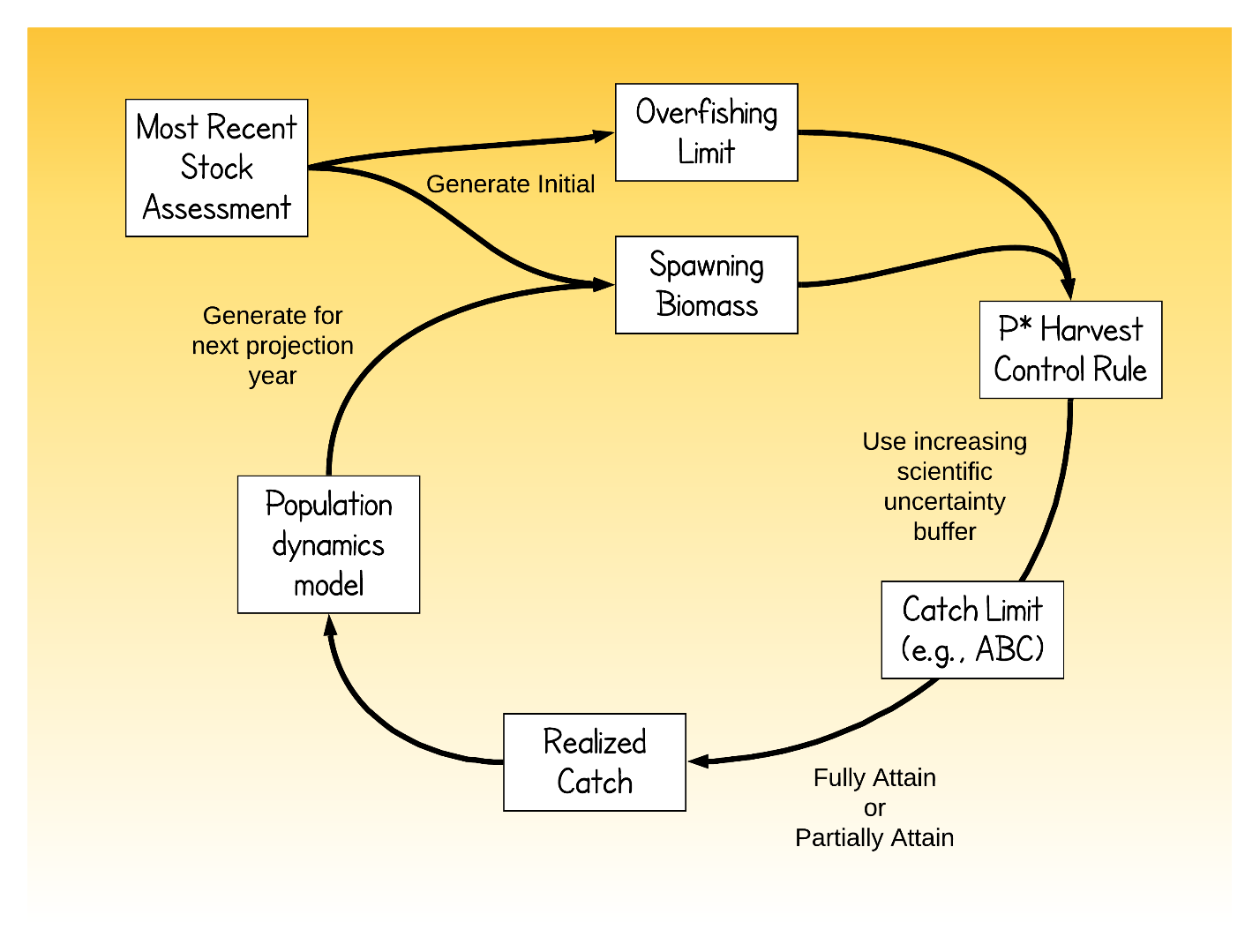

- Ch. 1: Phase-in harvest control rule (HCR) and other catch stability mechanisms

- Ch. 2: Assessment frequency vs. increasing scientific uncertainty buffers

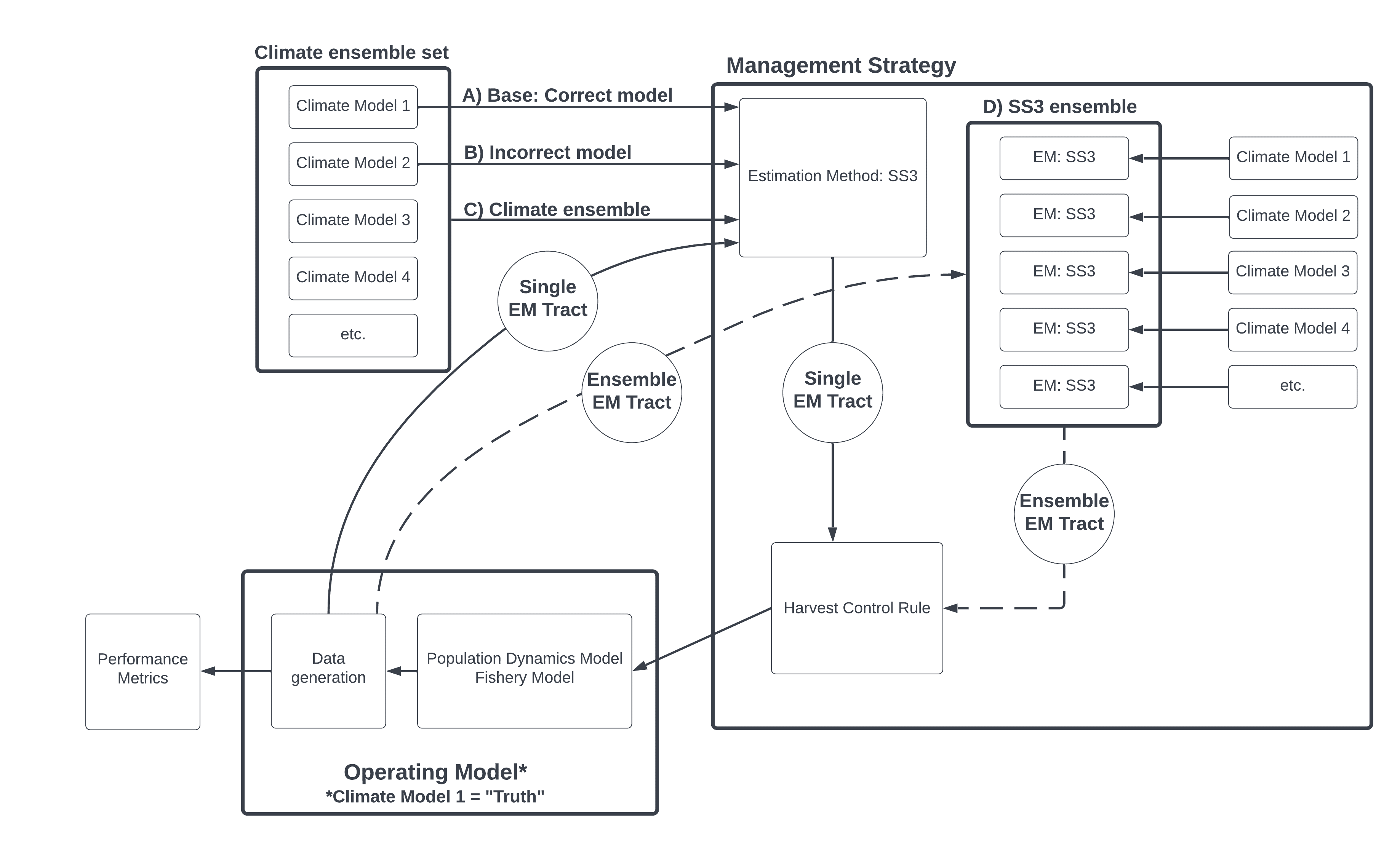

- Ch. 3: Incorporating multimodel inference into a climate-linked MSE framework

- Ch. 4: MSE Interviews

Chapters 1-2 Status Updates

- Ch. 1: Phase-in HCR and other catch stability mechanisms

  - Analysis ongoing.

  - Target date: end of the academic year

- Ch. 2: Assessment frequency vs. increasing scientific uncertainty buffers

  - Toy model stage.

  - Target date: 2023-2024 academic year

Chapters 3-4 Status Updates

- Ch. 3: Incorporating multimodel inference into a climate-linked MSE framework

  - Theoretical stage.

  - Target date: 2023-2024 academic year or 2024 calendar year

- Ch. 4: MSE Interviews

  - Exploring semi-structured interviews.

  - Target date: end of 2023 calendar year

Chapter 1

Ch. 1 Questions

- How do management quantities of interest respond when catch stability mechanisms are used to minimize interannual variation in catch after a new stock assessment produces a major increase or decrease in the overfishing limit?

- How well do phase-in rules and catch limit restraints perform for long- and short-lived stocks when a new assessment model changes the estimates of natural mortality, stock-recruit steepness, catch history, and selectivity form?
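The two mechanisms named in these questions can be sketched as simple annual update rules. This is a minimal sketch under stated assumptions, not the rules used in the chapter: `phase_in` assumes the catch limit closes an equal share of the remaining gap to the new HCR-derived limit each year, and `restrain` assumes a symmetric cap on the proportional year-to-year change. All function names and parameter values are hypothetical.

```python
# Hypothetical sketch of two catch stability mechanisms. The update rules
# and parameter values are illustrative assumptions, not the chapter's methods.


def phase_in(previous_limit: float, new_limit: float, years_left: int) -> float:
    """Move the catch limit an equal share of the remaining gap toward new_limit.

    With years_left = 3 the limit covers 1/3 of the gap this year, half of the
    remaining gap next year, and reaches new_limit in the final year.
    """
    if years_left < 1:
        raise ValueError("years_left must be at least 1")
    return previous_limit + (new_limit - previous_limit) / years_left


def phase_in_path(previous_limit: float, new_limit: float, n_years: int) -> list[float]:
    """Catch limits over an n_years phase-in toward a fixed new_limit."""
    path = []
    current = previous_limit
    for years_left in range(n_years, 0, -1):
        current = phase_in(current, new_limit, years_left)
        path.append(current)
    return path


def restrain(previous_limit: float, new_limit: float, max_change: float) -> float:
    """Clamp new_limit to within +/- max_change (a proportion) of previous_limit."""
    lower = previous_limit * (1.0 - max_change)
    upper = previous_limit * (1.0 + max_change)
    return min(max(new_limit, lower), upper)
```

For example, after an assessment that cuts the limit from 100 to 40, `phase_in_path(100.0, 40.0, 3)` steps down to 80, 60, and then 40, whereas `restrain(100.0, 40.0, 0.2)` allows only a drop to 80 in the first year and would keep limiting each subsequent step.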

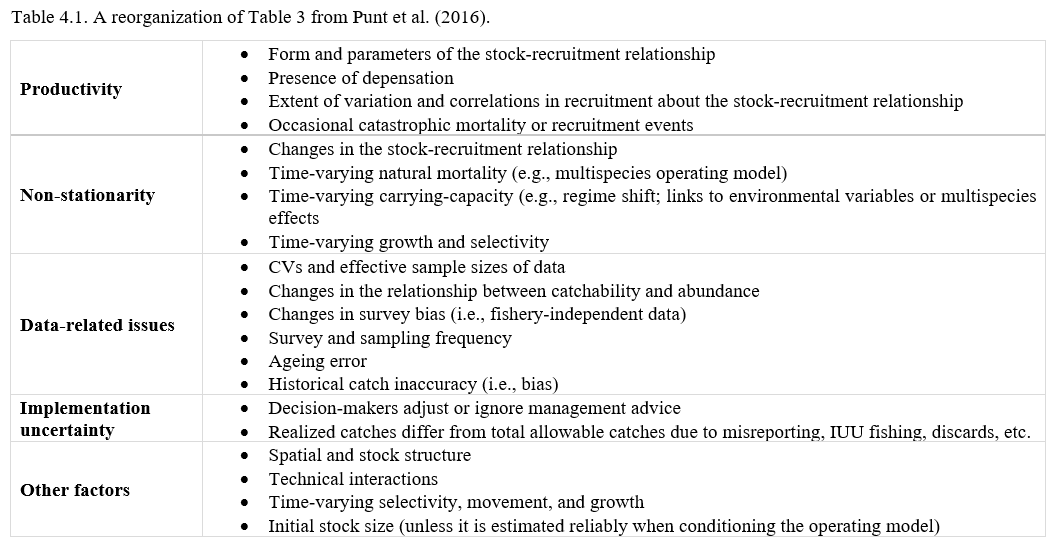

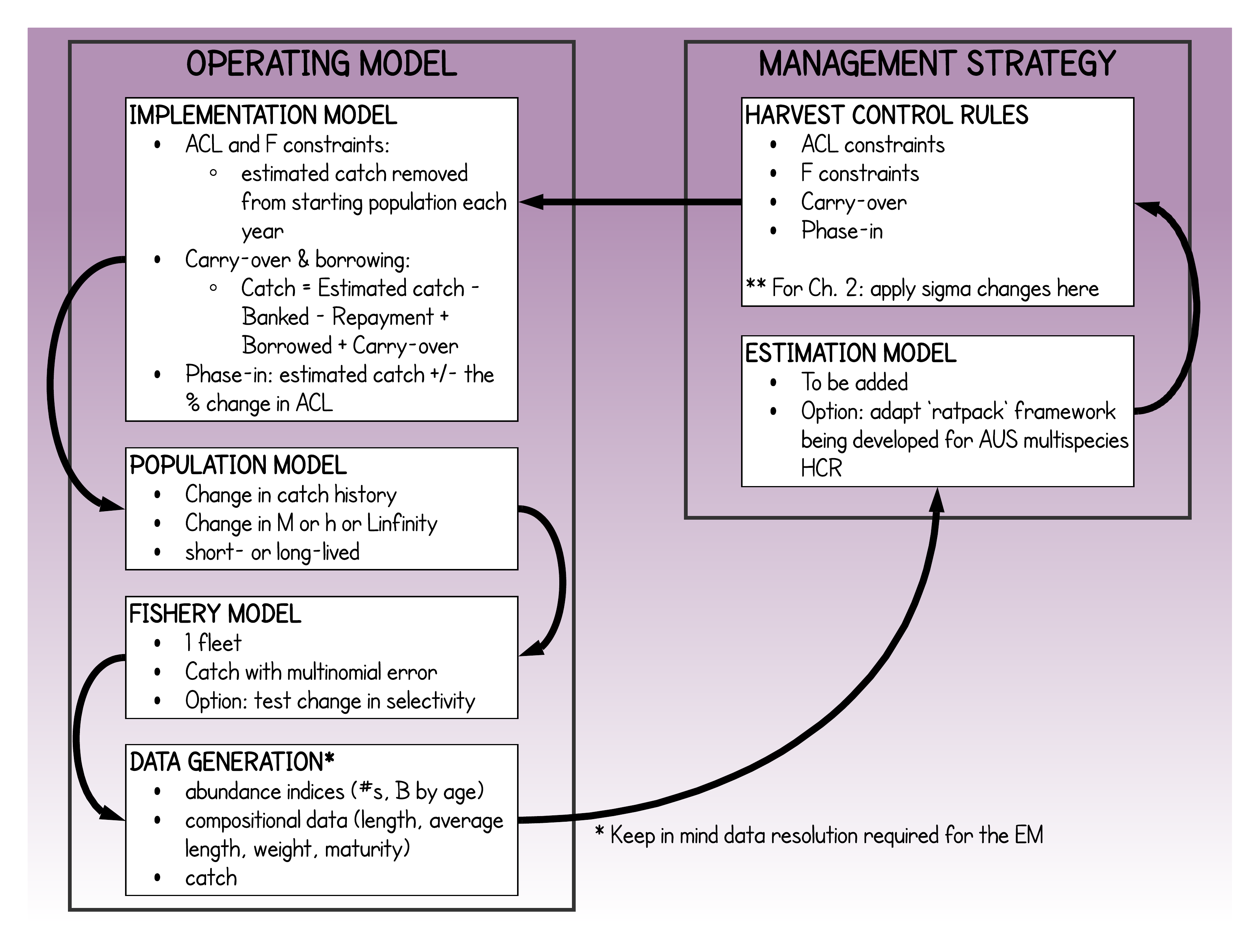

Methods Breakdown

Simulation Design

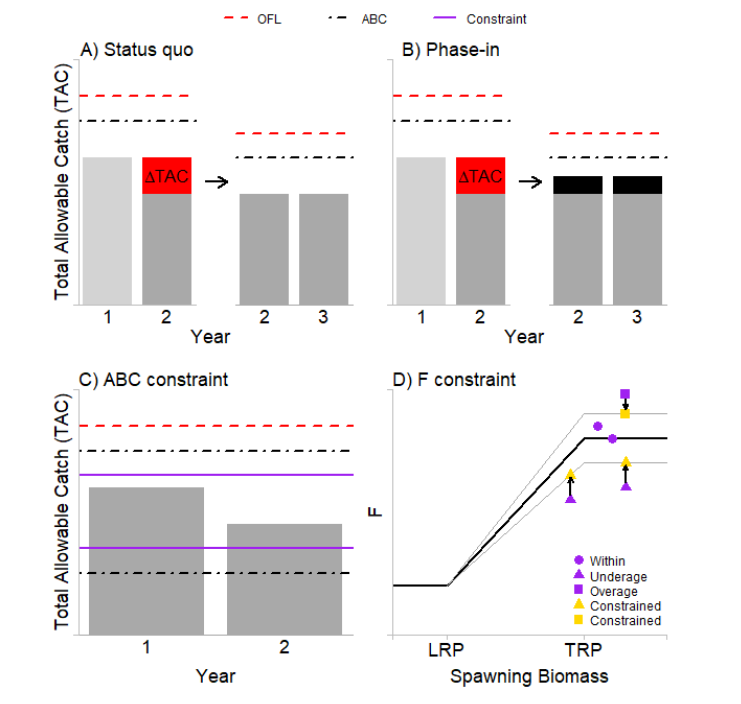

Catch Stability Mechanisms

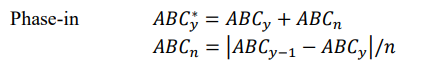

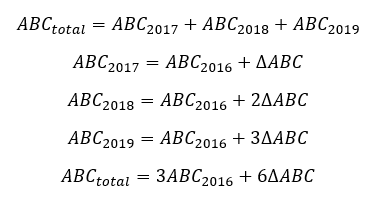

Updating the phase-in HCR

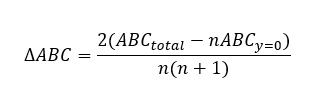

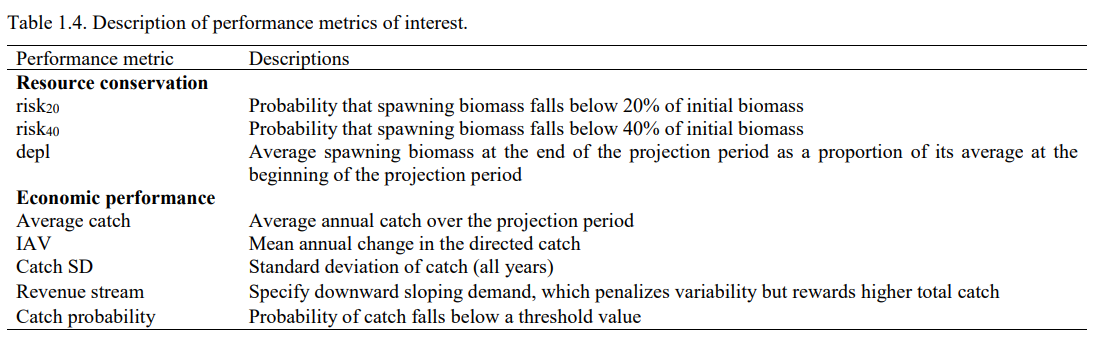

MSE performance metrics
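As an illustration of the kind of metrics an MSE might report, the sketch below computes mean catch, average annual variability (AAV) in catch (which measures the interannual variation that catch stability mechanisms aim to reduce), and the proportion of years with relative spawning biomass below a threshold. These metric choices, the function names, and the 0.25 threshold are assumptions rather than the chapter's final set.

```python
# Hypothetical MSE performance metrics for one simulated catch and biomass
# series. Metric choices and the threshold are illustrative assumptions.
from statistics import mean


def average_annual_variability(catches: list[float]) -> float:
    """AAV = sum_t |C_t - C_{t-1}| / sum_t C_t.

    This is one common definition; some studies restrict the denominator
    to the same years as the numerator.
    """
    if len(catches) < 2:
        raise ValueError("need at least two years of catch")
    total = sum(catches)
    if total == 0:
        return 0.0
    changes = sum(abs(curr - prev) for prev, curr in zip(catches, catches[1:]))
    return changes / total


def proportion_below(depletion: list[float], threshold: float) -> float:
    """Fraction of years with relative spawning biomass below threshold."""
    return sum(d < threshold for d in depletion) / len(depletion)


def summarize(catches: list[float], depletion: list[float],
              threshold: float = 0.25) -> dict[str, float]:
    """Bundle a few metrics; threshold=0.25 is an assumed placeholder."""
    return {
        "mean_catch": mean(catches),
        "aav": average_annual_variability(catches),
        "p_below_threshold": proportion_below(depletion, threshold),
    }
```

In a full MSE these would be computed for every simulation replicate and then summarized (for example, by medians and intervals) for each management procedure.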

Chapter 2

Ch. 2 Questions

- What are the potential costs of lost yield relative to the cost of conducting another assessment within a ten-year assessment interval?

- How does assessment bias (via assessment frequency and increasing scientific uncertainty buffers) influence management quantities of interest for target and nontarget stocks under various levels of attained catch?

  - Catch limits are taken completely (100% attainment)

  - Catches are less than the Annual Catch Limits

  - Catch limits are set for a stock that interacts with another stock

Comparisons to be made

- A baseline time series generated with annual assessments and no increasing scientific uncertainty buffers

- Time series generated with increasing scientific uncertainty buffers:

  - new assessments every 2 years

  - new assessments every 5 years

  - a new assessment in the tenth year
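One way to express an increasing scientific uncertainty buffer, used here purely as an assumption, scales the overfishing limit (OFL) to an acceptable biological catch (ABC) by ABC = OFL × exp(z_{P*} × σ_y), where z_{P*} is the standard normal quantile of the chosen probability of overfishing P* and σ_y grows linearly with years since the last assessment. The defaults (P* = 0.45, σ_0 = 0.5, increment 0.075 per year), the constant OFL, and the function names are illustrative placeholders; the sketch only shows how each assessment schedule above translates into a buffered catch series.

```python
# Hypothetical sketch of a buffer that grows with time since the last
# assessment. The functional form and all default values are assumptions.
import math
from statistics import NormalDist


def buffer_multiplier(years_since: int, p_star: float = 0.45,
                      sigma0: float = 0.5, increment: float = 0.075) -> float:
    """ABC/OFL = exp(z_{P*} * sigma_y), sigma_y = sigma0 + increment * years_since."""
    sigma = sigma0 + increment * years_since
    z = NormalDist().inv_cdf(p_star)  # negative for p_star < 0.5, so multiplier < 1
    return math.exp(z * sigma)


def years_since_assessment(n_years: int, interval: int) -> list[int]:
    """Years since the latest assessment, with assessments in year 0 and every `interval` years."""
    return [t % interval for t in range(n_years)]


def abc_series(ofl: list[float], interval: int) -> list[float]:
    """Buffered catch series for one assessment schedule.

    interval=1 gives the baseline: every year is assessed, so the buffer
    never grows beyond its year-0 value.
    """
    lags = years_since_assessment(len(ofl), interval)
    return [o * buffer_multiplier(lag) for o, lag in zip(ofl, lags)]
```

Holding the OFL constant isolates the buffer effect: `abc_series([1000.0] * 10, 10)` declines every year, while `abc_series([1000.0] * 10, 5)` resets after year 5. In the chapter's simulations the OFL itself would come from the (possibly biased) assessment.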

Chapter 3

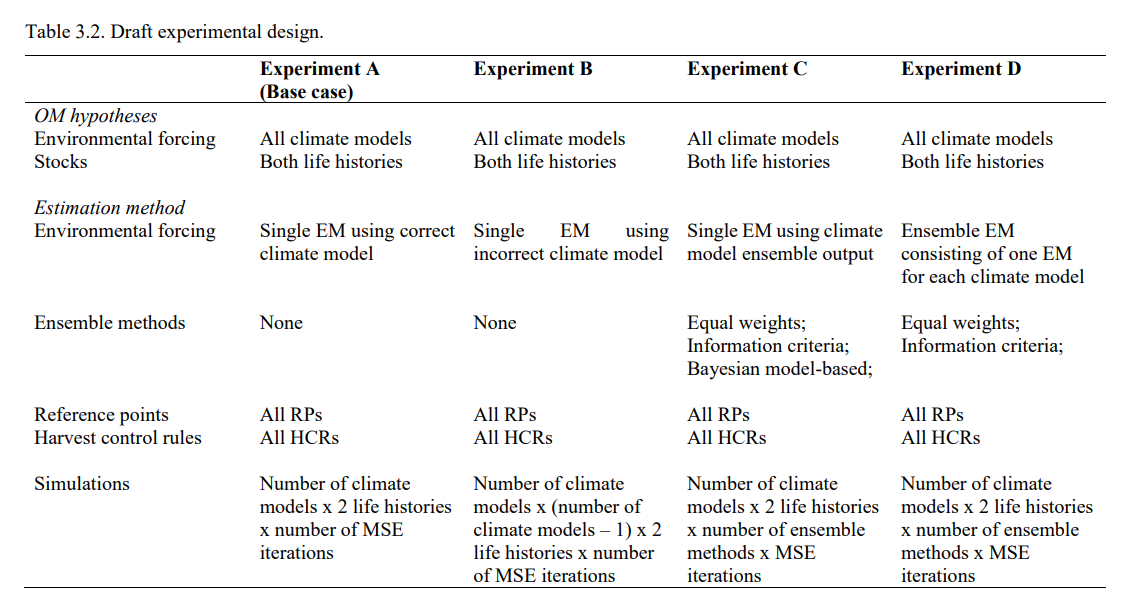

Ch. 3 Questions

- Can an ensemble modeling approach mitigate the consequences of model misspecification associated with selecting a single climate model for forecasts and assessments?

- Does it matter where in the MSE process the ensemble modeling takes place? For example, how do the reference points differ between:

  - a single estimation method using model output from an ensemble of climate models, and

  - an ensemble of estimation method output consisting of one estimation method for each climate model?
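The two ensemble placements can give different reference points whenever the estimation step is nonlinear in the climate input, because averaging inputs and averaging outputs are not interchangeable (Jensen's inequality). The toy sketch below illustrates this under loudly hypothetical assumptions: the "estimation method" is a Schaefer surplus-production relationship whose growth rate declines exponentially with mean temperature anomaly, and climate models receive fixed weights rather than a formal weighting scheme.

```python
# Toy comparison of where ensemble averaging happens. The "estimation method",
# its parameters, and the climate runs are all hypothetical.
import math


def estimate_reference_points(mean_temp: float, r0: float = 0.4,
                              beta: float = 0.5, k: float = 1000.0) -> dict[str, float]:
    """Toy estimation: growth rate r declines exponentially with temperature;
    Schaefer reference points are F_MSY = r/2 and MSY = r*K/4."""
    r = r0 * math.exp(-beta * mean_temp)
    return {"F_MSY": r / 2.0, "MSY": r * k / 4.0}


def weighted_mean(values: list[float], weights: list[float]) -> float:
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def combine_then_estimate(runs: list[list[float]], weights: list[float]) -> dict[str, float]:
    """Single estimation method fed the weighted ensemble of climate output."""
    run_means = [sum(run) / len(run) for run in runs]
    return estimate_reference_points(weighted_mean(run_means, weights))


def estimate_then_combine(runs: list[list[float]], weights: list[float]) -> dict[str, float]:
    """One estimation per climate model, then weight the reference points."""
    per_model = [estimate_reference_points(sum(run) / len(run)) for run in runs]
    return {key: weighted_mean([rp[key] for rp in per_model], weights)
            for key in per_model[0]}
```

With two equally weighted runs at anomalies of 0 and 2, `estimate_then_combine` returns a higher F_MSY than `combine_then_estimate`, because exp(-βT) is convex in T; when all climate models agree, the two placements coincide.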

Methods Breakdown

Simulation Design

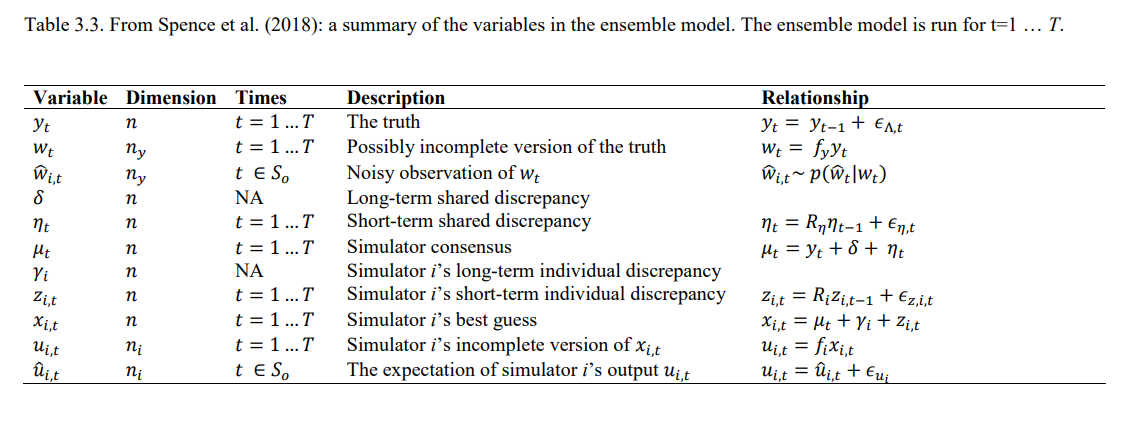

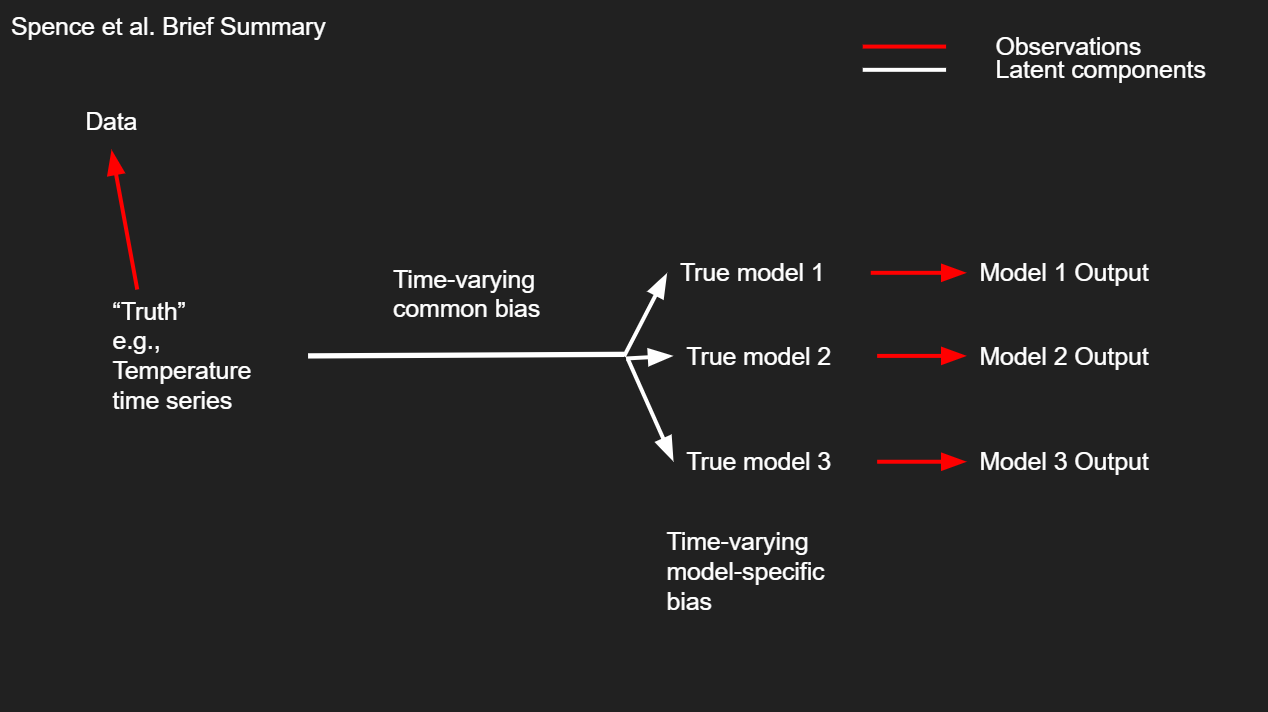

Bayesian model-based weights: Spence et al.

Bayesian model-based weights pt. 2
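As a generic point of reference only (an assumption, not necessarily the weighting used by Spence et al.), Bayesian model averaging assigns each model a posterior weight proportional to its marginal likelihood times its prior probability. The sketch computes those weights in log space so that very negative log marginal likelihoods do not underflow; the function name and inputs are hypothetical.

```python
# Generic Bayesian model averaging weights from log marginal likelihoods.
# Not necessarily the weighting scheme of Spence et al.
import math
from typing import Optional


def posterior_model_weights(log_marginal_likelihoods: list[float],
                            prior_weights: Optional[list[float]] = None) -> list[float]:
    """w_i = p(D | M_i) p(M_i) / sum_j p(D | M_j) p(M_j), computed in log space."""
    n = len(log_marginal_likelihoods)
    if prior_weights is None:
        prior_weights = [1.0 / n] * n  # equal prior probability for each model
    if len(prior_weights) != n or any(p <= 0 for p in prior_weights):
        raise ValueError("need one positive prior weight per model")
    log_terms = [lml + math.log(p)
                 for lml, p in zip(log_marginal_likelihoods, prior_weights)]
    top = max(log_terms)  # subtract the max before exponentiating (log-sum-exp trick)
    scaled = [math.exp(t - top) for t in log_terms]
    total = sum(scaled)
    return [s / total for s in scaled]
```

For instance, two models whose log marginal likelihoods differ by 1 receive weights in the ratio e : 1 under equal priors, even when both values are near -1000 and would underflow if exponentiated directly.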

Potential HCRs

Chapter 4

Ch. 4 Questions

- Which factors influence the range of uncertainties addressed in an MSE?

- What are examples of implemented meta-rules for tactical MSEs?

- Which jurisdictions have conducted multiple MSEs for a fishery?

- Which uncertainties were addressed in each MSE and why?

- Are there common factors behind the use of insufficient ranges of uncertainty in cases where performance was inadequate or subsequent monitoring results were surprising?

Methods Breakdown

- Summarize which uncertainties were prioritized in the surveyed MSEs and how the range of uncertainties addressed changed over time and across multiple applications of MSEs and/or meta-rules for a single fishery, where applicable.

Interview themes